The Looming Energy Crunch: AI’s Growing Appetite

The rapid advancement of artificial intelligence is not without its shadows. As AI models grow more complex, the energy needed to train them is skyrocketing, raising serious concerns about a potential global energy crisis. Greg Osuri, the founder of Akash Network, recently voiced these concerns at the Token2049 conference in Singapore, painting a stark picture of the future if the current trajectory continues.

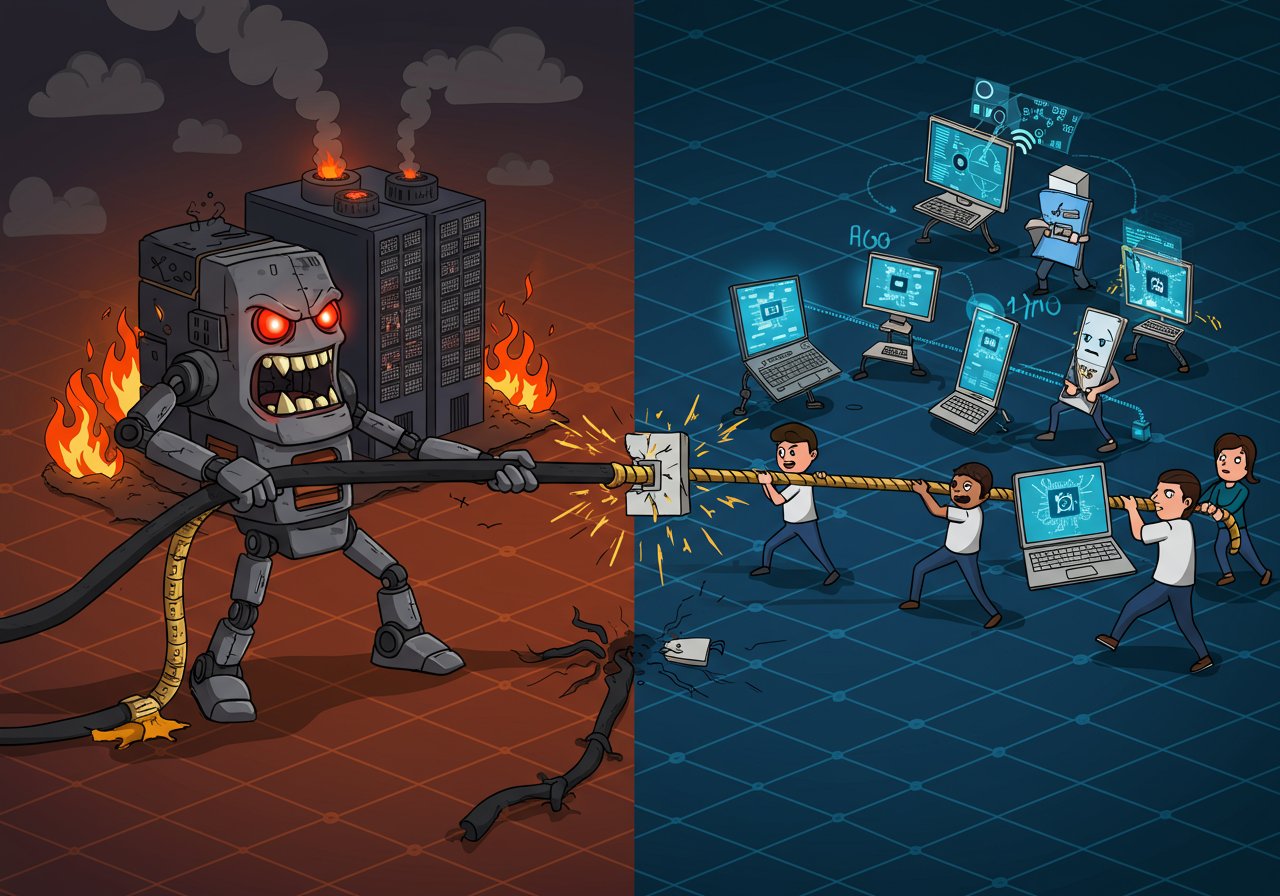

The Nuclear Reactor Analogy

Osuri’s warning is not hyperbolic. He points out that the energy consumed by AI training is growing exponentially and could soon match, or even exceed, the output of a nuclear reactor. The driving force behind this unprecedented demand is the ever-increasing complexity of AI models, which require vast amounts of computational power.

The Concentrated Problem: Data Centers and Emissions

A significant portion of this compute power is concentrated in large data centers, which are often powered by fossil fuels. This concentration exacerbates the problem: it causes localized spikes in energy costs, which drive up household electricity bills, and it increases emissions and environmental pollution.

Decentralization: A Potential Solution

Osuri advocates for a decentralized approach as a possible mitigation strategy. He suggests distributing AI training workloads across a network of smaller, diverse GPUs. This contrasts sharply with the current model of concentrating computing power in a few massive data centers.

The Promise of Distributed Training

This distributed model envisions utilizing a range of GPUs, from high-end enterprise chips to gaming cards in home PCs. The concept mirrors the early days of Bitcoin mining, where individuals could contribute their processing power and earn rewards. In this context, users could be incentivized to contribute their computing resources to train AI models, creating a more accessible and sustainable AI ecosystem.

Challenges and Opportunities

The transition to decentralized AI training is not without its hurdles. Developing the necessary software and coordination mechanisms to effectively utilize a patchwork of different GPUs requires significant technological advancements. Additionally, creating a fair and sustainable incentive structure for those providing their computing resources is critical.
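To make the two hurdles concrete, here is a minimal Python sketch of one possible approach. It is not Osuri's or Akash Network's method. It assumes each device reports a relative throughput figure, splits a training batch in proportion to that figure, and then divides a reward pool in proportion to the work each device did. The `Device` class, the `split_batch` and `split_reward` helpers, the device names, and all numbers are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    throughput: float  # hypothetical relative speed, e.g. samples per second


def split_batch(devices, global_batch):
    """Assign each device a share of the batch proportional to its throughput."""
    total = sum(d.throughput for d in devices)
    shares = {d.name: int(global_batch * d.throughput / total) for d in devices}
    # Rounding down can leave a few samples unassigned; give them to the
    # fastest devices first so the shares always add up to global_batch.
    leftover = global_batch - sum(shares.values())
    fastest_first = sorted(devices, key=lambda d: d.throughput, reverse=True)
    for d in fastest_first[:leftover]:
        shares[d.name] += 1
    return shares


def split_reward(shares, reward_pool):
    """Pay each contributor in proportion to the samples it processed."""
    total = sum(shares.values())
    return {name: reward_pool * n / total for name, n in shares.items()}


# Hypothetical mix of one enterprise chip and two home gaming cards.
devices = [
    Device("enterprise-gpu", 8.0),
    Device("gaming-card-a", 3.0),
    Device("gaming-card-b", 1.0),
]
shares = split_batch(devices, global_batch=1200)
rewards = split_reward(shares, reward_pool=100.0)
```

A real system would also have to handle stragglers, devices that drop out mid-run, network bandwidth limits, and verification that the claimed work was actually done; none of that is modeled here.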

The Future of AI: A Call to Action

Despite the challenges, Osuri believes that decentralization is essential to prevent an energy crisis and build a sustainable AI future. By spreading the workload and reducing reliance on fossil fuels, a decentralized approach could alleviate pressure on energy grids, curb carbon emissions, and democratize access to AI development. Realizing this vision will take a concerted effort from industry stakeholders to innovate and collaborate.