DeepSeek Pushes Open-Source AI Boundaries with Prover V2

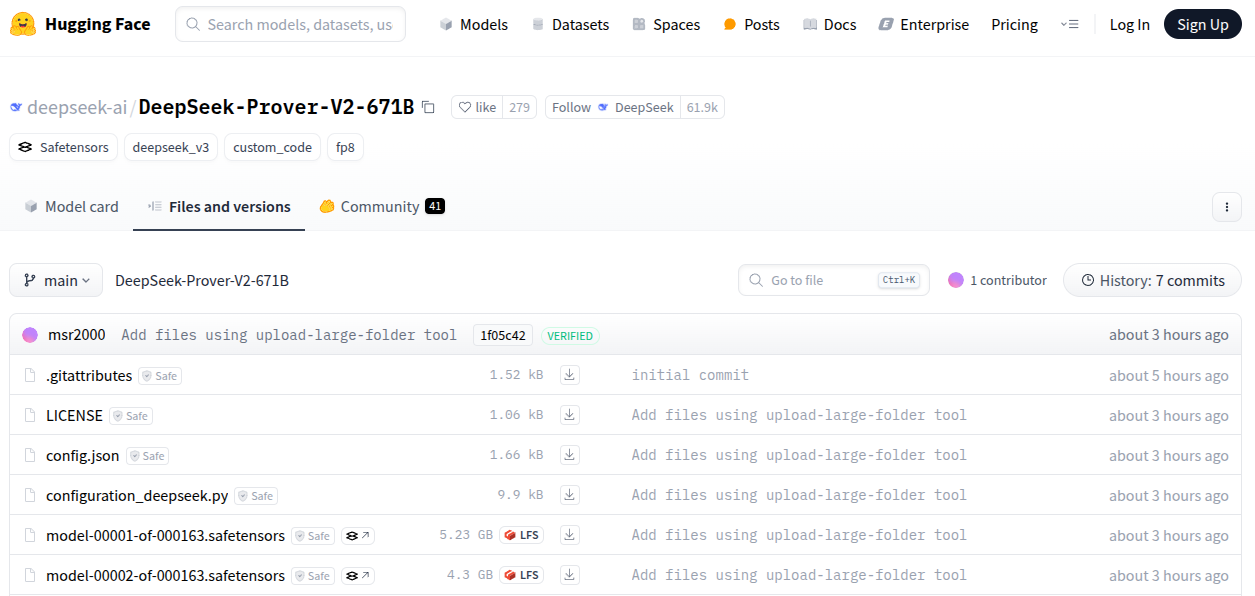

Chinese artificial intelligence powerhouse DeepSeek has made waves in the industry once again, this time with the release of its new open-source large language model (LLM) called Prover V2. This model, boasting a massive 671 billion parameters, is designed to excel in the challenging domain of mathematical proof verification. Prover V2 builds upon the success of its predecessors, Prover V1 and Prover V1.5, which were first released in August 2024.

DeepSeek uploaded Prover V2 to the popular hosting service Hugging Face on April 30, releasing it under the permissive MIT license. This move reinforces the company’s commitment to open access and collaboration within the AI community.

Building on a Foundation of Mathematical Logic

The original Prover V1 model, based on the seven-billion-parameter DeepSeekMath, was trained to translate math problems into formal logic using Lean 4, a programming language and proof assistant widely used for theorem proving. Because Lean 4 can mechanically check every step of a proof, the proofs that Prover V1 generated could be verified automatically, demonstrating its potential in both research and education.
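
To make the idea of formalization concrete, here is a minimal Lean 4 sketch. It is an illustration only, not an example drawn from DeepSeek’s models or training data, and the theorem names are hypothetical. `Nat.add_comm` is a lemma that ships with Lean’s core library.

```lean
-- Informal statement: 2 + 2 equals 4.
-- Lean accepts `rfl` because both sides evaluate to the same number.
theorem two_plus_two : 2 + 2 = 4 := rfl

-- Informal statement: for all natural numbers a and b, a + b = b + a.
-- The proof appeals to an existing core-library lemma.
theorem add_comm_example (a b : Nat) : a + b = b + a := by
  exact Nat.add_comm a b
```

If either proof were wrong, Lean would reject the file, which is what makes machine-generated proofs checkable without trusting the model that wrote them.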

While specific details about Prover V2’s improvements are still under wraps, the jump in parameter count suggests a potential leap in capabilities. It is likely that Prover V2 is based on DeepSeek’s earlier R1 model, which generated considerable buzz because its performance was comparable to that of OpenAI’s state-of-the-art o1 model.

Open Weights: A Double-Edged Sword

The decision to publicly release the weights of LLMs remains a controversial topic. Proponents see this as a democratizing force, empowering individuals to access advanced AI capabilities without relying on proprietary platforms. However, opponents worry about potential misuse and abuse of these models, highlighting the need for careful consideration of ethical implications and safeguards.

DeepSeek’s decision to release the weights of R1, and now Prover V2, has sparked a debate about the future of open AI and the balance between accessibility and responsible development. The open availability of these models, combined with techniques like model distillation and quantization, is putting powerful AI within reach of a wider audience.

Accessibility and Performance: A Balancing Act

Prover V2 arrives amid rapid progress in model distillation and quantization. Distillation trains a smaller model to imitate a larger one, while quantization stores a model’s weights at lower numerical precision. Both techniques can significantly reduce a model’s size, enabling users with less powerful hardware to run sophisticated models locally.
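
As a rough illustration of quantization, the following Python sketch applies symmetric 8-bit quantization with a single shared scale to a short list of weights. This is a simplified textbook scheme, not the method DeepSeek or any particular library uses. The function names and the weight values are made up for the example, and the code assumes a non-empty list.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero input, where any scale would do.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.42, -1.30, 0.07, 0.88]  # made-up values for illustration
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# The rounding error per weight is at most about half of one scale step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("quantized:", quantized)
print("scale:", scale)
print("max error:", max_error)
```

Each weight now fits in one byte instead of two or four, at the cost of a small rounding error. That error is the accuracy trade-off that quantized models have to manage.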

DeepSeek’s R1 model demonstrates this progress, with distilled versions ranging from 70 billion down to 1.5 billion parameters. The smallest of these can even run on mobile devices, expanding the potential reach of powerful AI. However, it is crucial to understand that these techniques can reduce model performance, so careful optimization is needed to maintain accuracy and functionality.
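
A back-of-the-envelope calculation shows why parameter count and precision matter for local use. The Python sketch below estimates the memory needed for the weights alone. The parameter counts come from this article, the helper name `weight_memory_gb` is hypothetical, and the estimate ignores activations, caches, and runtime overhead, so real requirements will be higher.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Parameter counts mentioned in the article.
MODEL_SIZES = {
    "Prover V2 (671B)": 671e9,
    "R1 distilled (70B)": 70e9,
    "R1 distilled (1.5B)": 1.5e9,
}

for name, num_params in MODEL_SIZES.items():
    for bits in (16, 8, 4):
        gb = weight_memory_gb(num_params, bits)
        print(f"{name} at {bits}-bit: {gb:.2f} GB")
```

Under these assumptions, 671 billion parameters at 16 bits need roughly 1,342 GB for weights alone, while 1.5 billion parameters at 4 bits need about 0.75 GB, which is why only the smallest variants are plausible on a phone.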

As DeepSeek continues to push the boundaries of open-source AI, Prover V2 represents a significant milestone in the development of accessible and powerful AI tools. Its focus on mathematical proof verification highlights the potential of AI in solving complex problems and unlocking new frontiers in research and education.